JavaScript's iterator helpers are fast for large arrays and deep transformation chains


Some people responded to my claim about the potential memory efficiency of iterator helpers by saying that iterator helpers aren't worth using. Their main point is that JavaScript arrays are highly optimized for both performance and garbage collection, so iterator helpers will usually be much slower without offering any significant memory savings. So I decided to run some benchmarks with arrays of different sizes and transformation chains of different depths. Here I'll focus only on execution speed, since measuring memory usage is more complicated.

The benchmark

I ran the benchmarks on jsbenchmark.com using Chrome 137 on Windows 11 with an AMD Ryzen 5000U CPU.

The core idea of the benchmark

The idea is to create an array of a certain size filled with random numbers and benchmark the same array transformations done via the iterator helpers (`iterable = array.values()`) and the traditional way (`iterable = array`).

- The benchmarks use 3 array sizes: 2000, 20000, and 200000.
- The chain has at most 5 transformations plus `reduce()`.

Here are all 5 transformations, with the `reduce()` used in the benchmark:

```javascript
iterable
  .filter(x => x < 0.9)
  .filter(x => x > 0.1)
  .map(x => x + 10)
  .map(x => x * 10)
  .map(x => x * x)
  .reduce((acc, cur) => acc + cur, 0);
```

To benchmark different transformation chain depths, the transformations (everything that comes before `reduce()`) are added gradually. For example, with 1 transformation it looks like this:

```javascript
iterable
  .filter(x => x < 0.9)
  .reduce((acc, cur) => acc + cur, 0);
```

With 2 transformations it looks like this:

```javascript
iterable
  .filter(x => x < 0.9)
  .filter(x => x > 0.1)
  .reduce((acc, cur) => acc + cur, 0);
```

And so on, until reaching all 5 transformations shown above.

Since covering every array size and every chain depth would require too many benchmarks (3 × 5 = 15), I only ran the chains with 1 and 5 transformations for each array size, which needs just 3 × 2 = 6 benchmarks. I think 6 benchmarks are enough to see the patterns without being overwhelmed.

The setup

In the benchmark setup, we generate an array filled with random numbers:

```javascript
return Array.from({length: N}, () => Math.random())
```

`N` is the size of the array: 2000, 20000, or 200000.

The results

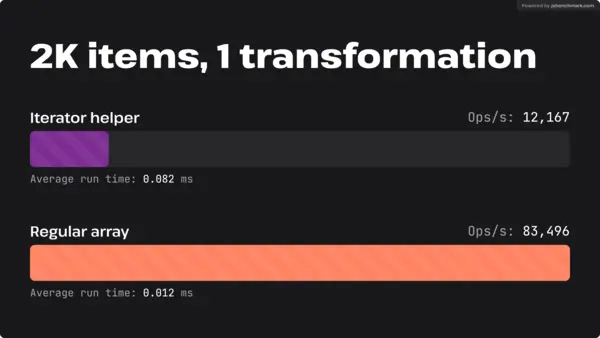

With the smallest array (2K elements) and only 1 transformation, the regular array transformations are much faster. That isn't surprising, though, and I was suggesting iterator helpers for huge arrays, not small ones.

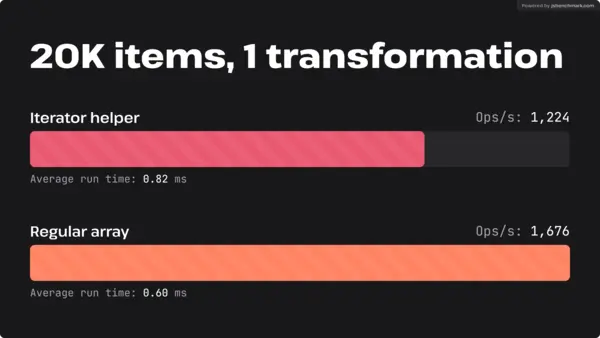

As the size increases to 20K, the gap shrinks substantially. This seems to support my theory that iterator helpers have an advantage for huge arrays.

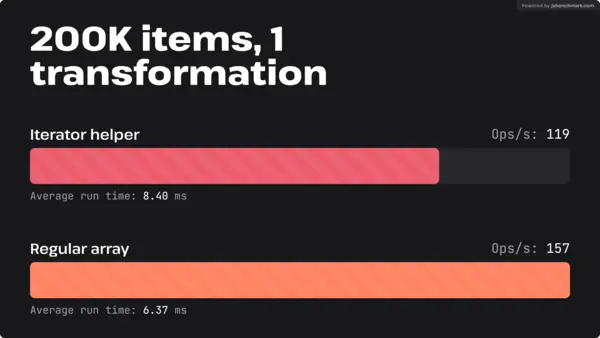

With 200K elements the gap shrinks further, but only slightly this time, so with a single transformation the iterator helpers will probably not reach the performance of the regular array transformations. Still, it looks like the temporary array allocations have a real performance cost.

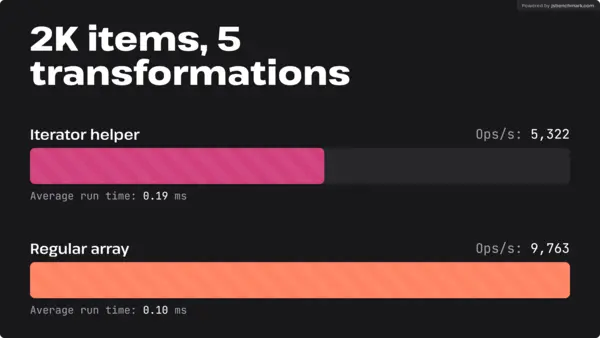

Now let's see what happens if we use much deeper transformation chains. I predict much better results for the iterator helpers because this means more temporary array allocations for regular array transformations.

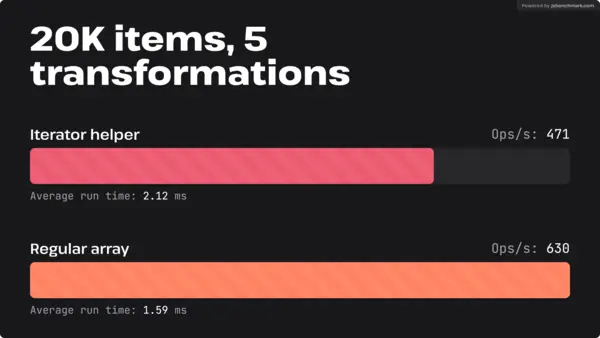

Like the 2K case for single transformation, the iterator helpers perform worse, but the gap is not as dramatic. Looks like the deeper chain causes more temporary array allocations for regular array transformations, and they start to perform worse from that.

The result is similar to the 20K and single transformation case. Both perform absolutely slower compared to the single transformation case, but the relative ratio is very similar to the 20K and single transformation case.

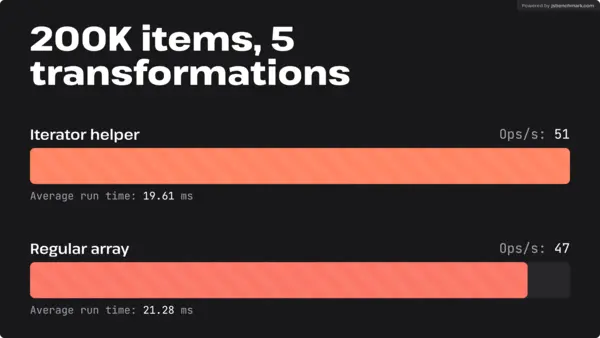

And finally, with 200K elements, the iterator helpers slightly outperform the regular array transformations. Honestly, my main point was the reduction in memory usage rather than execution speed, but I'm pleasantly surprised that iterator helper transformations can even run faster than regular array transformations for huge arrays and deep transformation chains. I also tested with perf.link and got a similar result.

Conclusion

On Chromium-based browsers, iterator helper transformations are not only potentially more memory-efficient than regular array transformations (although that still needs to be measured), but can also run faster, which is a bit surprising. Of course, results may differ somewhat on other machines, since the CPU, OS, browser engine optimizations, etc. all affect execution speed, but the benchmark still shows the overall pattern: iterator helper transformations close the gap, or even pull ahead, when the array is huge and the transformation chain is deep.