Some software bloat is OK


In the era of fast CPUs, gigabytes of RAM, and terabytes of storage, software efficiency has become an overlooked thing. Many believe there are fewer reasons to optimize software today, as processors are fast and memory is cheap. They claim that we should focus on other things, such as developer efficiency, maintainability, fast prototyping, etc. They often cite Donald Knuth's famous quote:

Premature optimization is the root of all evil.

How bad is software bloat nowadays?

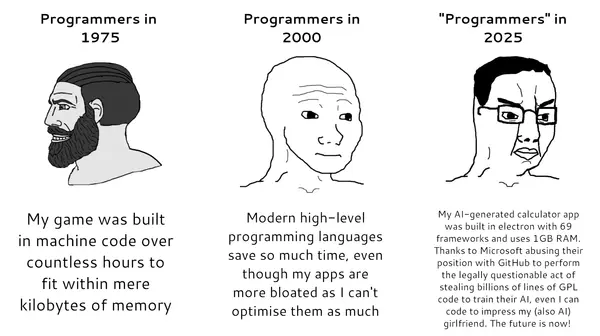

Historically, computers had much less computing power and memory. CPUs and memory were expensive, and programmers were heavily constrained by CPU speed and available memory. A lot of work had to be done to fit a program into these limited resources. It's no surprise that many programs of the 1970s and 1980s were written in very low-level languages, such as machine code or assembly, as these give programmers ultimate control over every byte and processor instruction.

Over time, memory and CPUs (and hardware in general) became cheaper. This relaxed the constraints, allowed programmers to use higher-level languages, and eventually led to the rise of garbage-collected languages (Java, C#, PHP, Python, JavaScript, etc.).

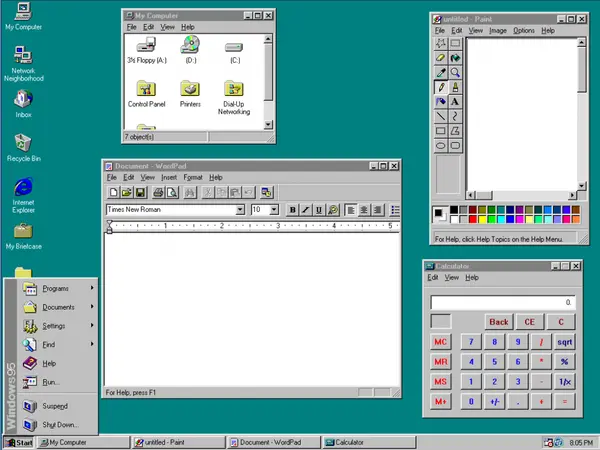

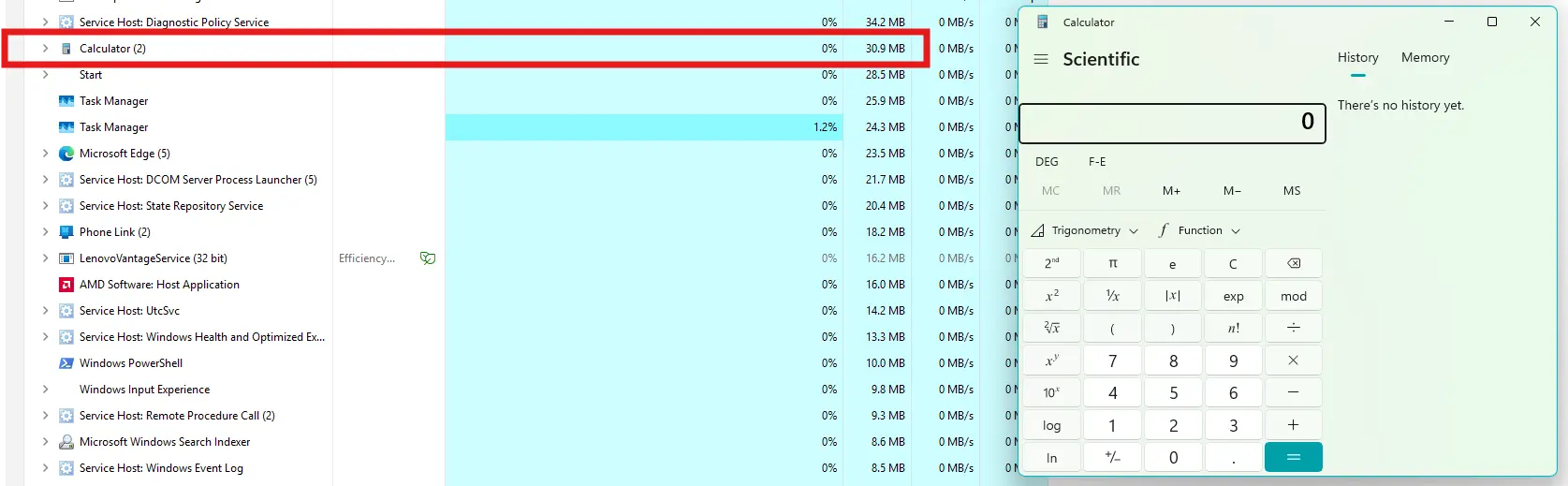

Comparing the system requirements of older and newer software can be shocking. Compare, say, the Windows 11 Calculator alone (never mind the full OS) with the whole Windows 95 OS! The Windows 11 Calculator alone consumes over 30MiB of RAM (and even this might be an underestimate, because shared memory is not included), while Windows 95 could run with as little as 4MiB of RAM.

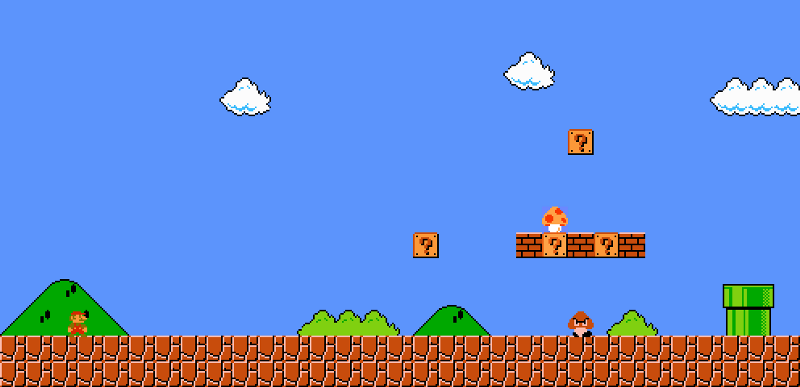

Here is an even more dramatic example. The Super Mario Bros. game was just 31KiB (or 40KiB, which hardly changes anything) and used only 2KiB of RAM. Yet this high-quality WebP image (lossless, preserving the original pixels) is almost 54KiB. Larger than the game itself!

A significant part of this bloat is actually a tradeoff

At first glance this seems insane. The developers probably just don't care about efficiency, right? Well, it's more complicated: a significant part of this bloat isn't caused by developers' incompetence or laziness.

Today's software solves problems that were less of a concern in the 1990s and earlier. Here are some examples:

- Layers & frameworks. Modern software tends to be more complex. Most of the time, people don't write software from scratch; they build on libraries and frameworks. For example, the Windows 11 Calculator mentioned above is a UWP / WinUI / XAML app with C++ / WinRT. Launching it loads a UI framework, a layout engine, localization, input handling, vector icons, theming, high-DPI support, etc. Those shared DLLs live somewhere in RAM even if Task Manager only counts part of them in the app's "Working Set".

- Security & isolation. Security is very important nowadays. Sandboxes, code integrity checks, ASLR, Control Flow Guard (CFG), data execution prevention (DEP), etc. add processes, memory mappings, and metadata. Little of this existed in older software.

- Robustness & error handling / reporting. This is related to the previous point. Modern software is usually complex, has tons of edge cases, and is sometimes integrated with third-party services, so it has to handle and log all kinds of possible errors and failures. All these safety measures add extra code as well.

- Globalization & accessibility. Full Unicode, right-to-left (RTL) and complex script support, screen readers, keyboard navigation, and high-contrast and animation settings all bring extra code and resources.

- Containers & virtualization. Containers bundle an app with its exact runtime and dependencies into an image that can run on any host with Docker. Running the same container in dev, CI, staging, and prod means fewer bugs caused by environment drift. VMs are great when, in addition to this, something has to run on an OS that is incompatible with the host OS.

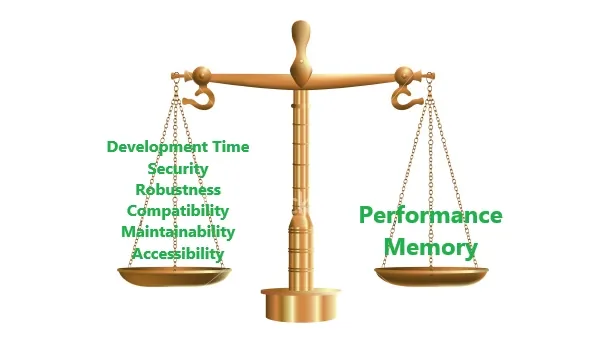

- Engineering trade-offs. We accept a larger baseline in exchange for shipping faster, safer code across many devices. Hardware has grown by roughly three orders of magnitude, and developer time is often more valuable than RAM or CPU cycles. Modern software is also usually developed by many people (sometimes even by several organizations), which calls for a code structure that eases maintenance and collaboration: modularity, extensibility and flexibility, code and architectural patterns, etc. Just try adding a feature to an ancient, ultra-optimized game written entirely in assembly by some hobbyist. Without the author's help it will probably be very challenging: understanding the code will feel more like reverse engineering, and your changes might break some "clever but fragile" code. We certainly don't want that.

But yeah, a significant part of the bloat is also bad

That being said, a lot of this bloat is not the result of a trade-off but of incompetence or laziness: for example, using libraries or frameworks for trivial things, or not understanding algorithmic complexity. Many websites are notoriously bloated with dozens (sometimes hundreds) of questionable dependencies, which not only degrade performance but can also cause security and maintenance problems. And nowadays AI acts as a multiplier for such problems.
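To make the algorithmic-complexity point concrete, here is a minimal Python sketch (the function names and the input size are hypothetical, chosen only for illustration). Deduplicating a list by checking membership in another list costs O(n²), while tracking already-seen items in a set costs O(n) on average. The last variant shows that this trivial task doesn't need a helper library at all: since Python 3.7, dicts preserve insertion order, so `dict.fromkeys` does the job.

```python
import timeit


def dedupe_quadratic(items):
    """Keep first occurrences. `x not in result` scans a list, so this is O(n^2)."""
    result = []
    for x in items:
        if x not in result:  # linear scan of `result` on every iteration
            result.append(x)
    return result


def dedupe_linear(items):
    """Same output, but membership checks hit a set: O(n) on average."""
    seen = set()
    result = []
    for x in items:
        if x not in seen:  # average O(1) hash lookup
            seen.add(x)
            result.append(x)
    return result


def dedupe_stdlib(items):
    """No extra dependency needed: dicts keep insertion order (Python 3.7+)."""
    return list(dict.fromkeys(items))


if __name__ == "__main__":
    data = list(range(2_000)) * 2  # 4,000 items, 2,000 unique (arbitrary size)
    expected = list(range(2_000))
    for fn in (dedupe_quadratic, dedupe_linear, dedupe_stdlib):
        assert fn(data) == expected  # all three keep first occurrences in order
        seconds = timeit.timeit(lambda: fn(data), number=5)
        print(f"{fn.__name__}: {seconds:.4f}s")
```

Exact timings depend on the machine, but the gap widens quickly with input size: doubling n roughly quadruples the work of the quadratic version while only doubling the others.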

Another source of bloat is over-engineering:

- Microservices for a tiny app.

- Generic plugin systems.

- Dependency injection (DI) forests.

- "Just in case" interfaces that are used once.

- SPA + global state for static content (heavy hydration for simple pages).

- Multiple build steps/tools for marginal gains.

Finally, the obsession with containers is also concerning. Containerized apps often have longer startup times (noticeably long even on SSDs) and higher RAM and CPU usage (looking at you, Ubuntu Snap). Sadly, containers are a very appealing crutch for mitigating compatibility and security issues in ordinary desktop apps.

"Bottlenecks" still exist and are still optimized

Highly optimized programs (or highly optimized parts of programs) are still in high demand and won't disappear any time soon. Here is a small sample of such software:

- Codecs: dav1d (AV1), x264 / x265 (H.264 / H.265), libjpeg-turbo.

- Archivers / compressors: zstd, xz/LZMA, brotli, libarchive.

- VMs / JITs: HotSpot (JIT tiers + GC tuning), V8 / JavaScriptCore (inline caches, TurboFan), LuaJIT (trace JIT).

- Std libs: stdlib, musl (small, predictable), modern C++ STL (small-buffer optimization, node vs flat containers), Rust std (zero-cost iterators).

- Crypto: OpenSSL / BoringSSL / LibreSSL (constant-time, vectorized primitives).

- GPU drivers.

- Game engines.

- OS kernels.

Such software will always exist; it has just moved into niches or become the lower-level "backbone" of higher-level software.

Conclusion

Some bloat is actually OK, and it has benefits: without some bloat we would not have so much innovation. Sure, we could make many things ultra-optimized and shrink many apps by a factor of 10-100. But most of the time that would be a poor trade of developer time for a Pyrrhic victory. On the other hand, like most things, bloat becomes harmful when it's not kept in moderation, and we see plenty of that too.